6-ASKA Patents Brainstorm 20241031

Written by: Paul Lowndes

Table of Contents

New Claims for Patents 1&2 re: security meshes

Rewritten Claim 1 (Decentralized Enforcement):

Rewritten Claim 2 (Hybrid Enforcement):

Claim 1: Secure UI Monitoring and Contextual Analysis within an Isolated Execution Environment

Claim 2: Secure and Verifiable AI Agent Update Mechanism within an Isolated Execution Environment

Claim 3: Consensus-Driven Security Orchestration with an AI Agent

NEW Patent P36: Secure and Adaptive Onboard AI Agent for Multi-Kernel Architectures

NEW claim for PATENT xx: Dynamically Reconfigurable Trust Zones based on Real-Time Threat Assessment

NEW claim for PATENT xx: AI-Driven Predictive Security Policy Generation and Deployment

NEW PATENT 37: System and Method for Secure UI Interaction Auditing with Tamper-Evident Data Lineage

BRAINSTORM 20 NEW IDEAS FOR ASKA:

BRAINSTORM 10 MORE IDEAS FOR ASKA

Based on your provided text and diagrams for the Security Mesh, here are three novel independent claims, assigned to either Patent 1 or Patent 2:

A method for enhancing security within a modular isolated execution stack (IES) comprising a plurality of child IES instances, each child IES instance having dedicated processing, memory, and communication resources, the method comprising:

a. passively monitoring, by a Local Security Mesh (LSM), resource access patterns of said child IES instances, said monitoring performed out-of-band and without direct interaction with said resources, wherein said resource access patterns include at least one of: memory access patterns, storage access patterns, or peripheral device access patterns;

b. analyzing, by a Watcher Mesh, said resource access patterns for anomalies indicative of potential security threats, wherein said Watcher Mesh receives anomaly reports from said LSM via a unidirectional communication channel, preventing a compromised LSM from sending malicious instructions to said Watcher Mesh; and

c. communicating, by said Watcher Mesh, detected anomalies to a central analysis hub (AI Hub) via a bidirectional communication channel for further investigation and response coordination, wherein said AI Hub is logically and physically separated from said IES and said LSM, enhancing security and resilience against compromise.
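Below is a minimal Python sketch of the three roles in this method. It assumes an in-memory channel and a simple "3x baseline" anomaly rule; both are hypothetical, and the claim does not specify a detection rule. In the claimed system the one-way property is enforced below software. Here it is only approximated by giving the LSM a send-only handle and the Watcher Mesh a receive-only handle, so the LSM has no method through which it could read from, or instruct, its Watcher.

```python
from collections import deque
from dataclasses import dataclass


@dataclass(frozen=True)
class AnomalyReport:
    ies_id: str
    resource: str
    observed: int
    expected: float


class _Buffer:
    """Shared buffer behind a one-way channel; never handed to a component."""

    def __init__(self):
        self.items = deque()


class SendEnd:
    """Write-only end: exposes send() and no way to read."""

    def __init__(self, buf):
        self._buf = buf

    def send(self, item):
        self._buf.items.append(item)


class ReceiveEnd:
    """Read-only end: exposes drain() and no way to write back."""

    def __init__(self, buf):
        self._buf = buf

    def drain(self):
        items = list(self._buf.items)
        self._buf.items.clear()
        return items


def one_way_channel():
    buf = _Buffer()
    return SendEnd(buf), ReceiveEnd(buf)


class LocalSecurityMesh:
    def __init__(self, tx, baseline, factor=3.0):
        self.tx = tx              # send-only: the LSM cannot read from its Watcher
        self.baseline = baseline  # resource -> expected accesses per window
        self.factor = factor      # hypothetical anomaly threshold multiplier

    def observe(self, ies_id, access_counts):
        # Passive: works on counts of observed accesses, never on the resources.
        for resource, count in access_counts.items():
            expected = self.baseline.get(resource, 0.0)
            if count > self.factor * max(expected, 1.0):
                self.tx.send(AnomalyReport(ies_id, resource, count, expected))


class WatcherMesh:
    def __init__(self, rx, hub_inbox):
        self.rx = rx                # receive-only end of the LSM channel
        self.hub_inbox = hub_inbox  # stand-in for the bidirectional AI Hub link

    def forward(self):
        reports = self.rx.drain()
        self.hub_inbox.extend(reports)
        return len(reports)


tx, rx = one_way_channel()
lsm = LocalSecurityMesh(tx, baseline={"ssd": 10.0, "ram": 200.0})
ai_hub_inbox = []
watcher = WatcherMesh(rx, ai_hub_inbox)
lsm.observe("child-ies-1", {"ssd": 45, "ram": 150})
forwarded = watcher.forward()
```

The `ai_hub_inbox` list stands in for the bidirectional AI Hub link, which this sketch does not model.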

You're seeking to refine the core claim of Patent 1, the foundation of ASKA. This requires careful consideration of what constitutes true novelty.

Review of Patent 1 Claim 1 and Proposed Claim 1new:

The current Patent 1 Claim 1 focuses on the structural elements of the IES, including hierarchical zones, mini-TRCs, dynamic partitioning, and capability-based communication. The proposed P1 Claim 1new shifts the focus to the method of enhancing security within an IES, emphasizing passive monitoring by the LSM and Watcher Mesh.

Categorization of Insights (Novel vs. Not Novel):

A secure computing system comprising a plurality of Modular Isolated Execution Stacks (IES), each IES comprising:

a. a hierarchy of Zones, each Zone associated with a localized Trust Root Configuration (mini-TRC) defining trust roots and policies, wherein said mini-TRC is stored on a tamper-evident storage medium;

b. a plurality of child IES instances, each associated with a Zone and comprising dedicated processing, memory, and communication resources, and a unique, cryptographically verifiable identifier;

c. a secure communication fabric between said child IES instances, including dynamically reconfigurable, capability-augmented communication channels;

d. a dynamic partitioning mechanism for securely managing child IES instances and their resources based on workload demands, security requirements, and trust policies; and

e. a hierarchical, out-of-band security monitoring system comprising:

i. a Local Security Mesh (LSM) passively monitoring resource access patterns of said child IES instances without direct interaction, enabling compromise resilience;

ii. a plurality of Watcher Meshes, each associated with an LSM, passively monitoring said LSM and its associated child IES instances and receiving anomaly reports from said LSM via unidirectional channels; and

iii. a central analysis hub (AI Hub), logically separated from said IES and LSM, receiving anomaly reports and analysis from said Watcher Meshes via bidirectional channels for investigation and response coordination.

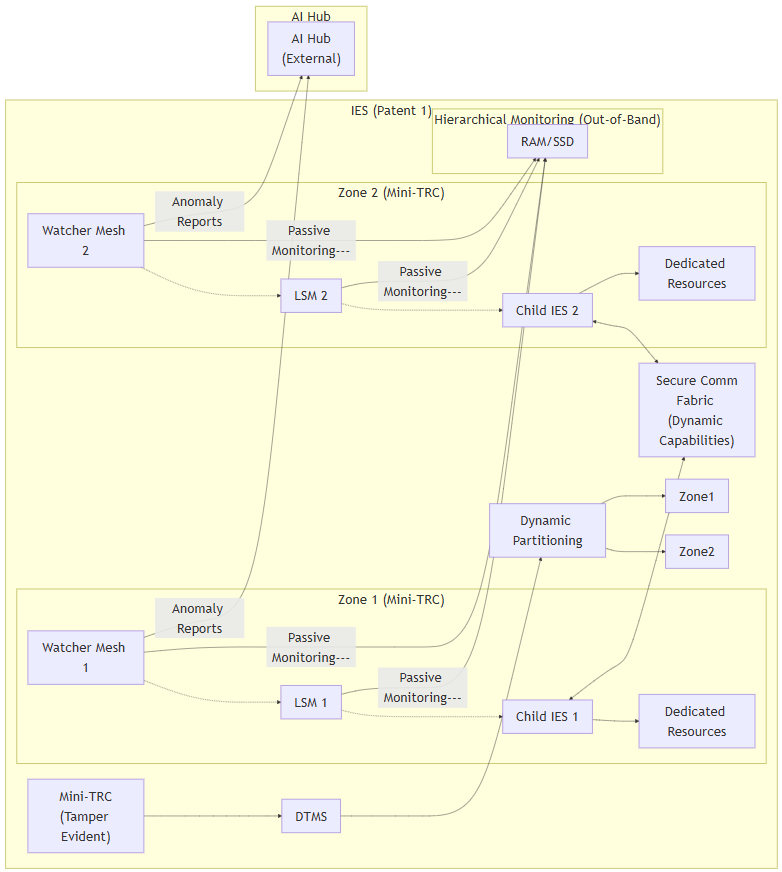

Diagram for Claim 1:

```mermaid
graph LR
    subgraph IES ["IES (Patent 1)"]
        direction LR
        subgraph Zone1 ["Zone 1 (Mini#8209;TRC)"]
            ChildIES1[Child IES 1] --> Resources1["Dedicated Resources"]
            LSM1["LSM 1"] -.-> ChildIES1
            Watcher1["Watcher Mesh 1"] -.-> LSM1
        end
        subgraph Zone2 ["Zone 2 (Mini#8209;TRC)"]
            ChildIES2[Child IES 2] --> Resources2["Dedicated Resources"]
            LSM2["LSM 2"] -.-> ChildIES2
            Watcher2["Watcher Mesh 2"] -.-> LSM2
        end
        ChildIES1 <--> CommFabric["Secure Comm<br>Fabric (Dynamic Capabilities)"]
        ChildIES2 <--> CommFabric
        DTMS["DTMS"] --> Partitioning["Dynamic Partitioning"]
        MiniTRC["Mini-TRC<br>(Tamper Evident)"] --> DTMS
        Partitioning --> Zone1 & Zone2
        subgraph Monitoring ["Hierarchical Monitoring (Out#8209;of#8209;Band)"]
            LSM1 -.->|"Passive Monitoring"| RAMSSD["RAM/SSD"]
            LSM2 -.->|"Passive Monitoring"| RAMSSD
            Watcher1 -.->|"Passive Monitoring"| RAMSSD
            Watcher2 -.->|"Passive Monitoring"| RAMSSD
        end
    end
    subgraph Hub ["AI Hub"]
        AIHub["AI Hub <br>(External)"]
        Watcher1 --"Anomaly Reports"--> AIHub
        Watcher2 --"Anomaly Reports"--> AIHub
    end
```

Description of Diagram for Claim 1:

A method for enhancing security in a computing environment using a plurality of Modular Isolated Execution Stacks (IES), each comprising a hierarchy of Zones and a plurality of child IES instances, the method comprising:

a. defining, for each Zone within an IES, a localized Trust Root Configuration (mini-TRC) specifying trust roots and security policies, and storing said mini-TRC on a tamper-evident storage medium;

b. instantiating a plurality of child IES instances, each associated with a Zone, said instantiating comprising:

i. allocating dedicated processing, memory, and communication resources to each child IES instance;

ii. assigning a unique, cryptographically verifiable identifier to each child IES instance; and

iii. establishing secure communication channels between child IES instances using dynamically configurable, capability-augmented protocols;

c. dynamically partitioning said IES into said child IES instances based on real-time workload demands, security requirements, and trust policies defined in said mini-TRCs, securely migrating active processes between child IES instances while maintaining isolation and resource integrity;

d. managing trust relationships between child IES instances and across Zones using a Dynamic Trust Management System (DTMS), said DTMS utilizing said mini-TRCs, cryptographic identity verification, and dynamic trust metrics derived from observed behavior and declared security posture; and

e. passively monitoring resource access patterns and system behavior of said child IES instances and associated system resources using a hierarchical, out-of-band security monitoring system, said monitoring comprising:

i. employing a Local Security Mesh (LSM) for each IES to monitor resource access patterns without direct interaction, enhancing compromise resilience and enabling detection of anomalies indicative of security threats;

ii. utilizing a plurality of Watcher Meshes, each associated with an LSM, to passively monitor said LSM and its corresponding IES and to receive anomaly reports from said LSM via unidirectional communication channels, preventing compromised LSMs from influencing Watcher Meshes; and

iii. communicating, by said Watcher Meshes, detected anomalies and security telemetry to a central analysis hub (AI Hub), logically separated from said IES and LSM, for further investigation and coordinated response.
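The claim leaves open how the DTMS turns observed behavior and declared security posture into a trust metric. One possible reading, sketched in Python below, blends a declared-posture score with an exponential moving average (EMA) of policy-compliance observations. It also sets trust to zero for any instance whose cryptographic identity is unverified. The weights, the EMA, and the `may_communicate` helper are illustrative assumptions, not part of the claim.

```python
from dataclasses import dataclass


@dataclass
class TrustRecord:
    declared_posture: float     # 0..1, e.g. derived from attested configuration
    identity_verified: bool     # outcome of cryptographic identity verification
    behavior_ema: float = 0.5   # 0..1, neutral prior before any observation


class DynamicTrustMetric:
    """Hypothetical DTMS scoring: weighted mix of declared posture and observed behavior."""

    def __init__(self, alpha=0.2, declared_weight=0.3):
        self.alpha = alpha                      # EMA smoothing factor
        self.declared_weight = declared_weight  # share given to declared posture
        self.records = {}

    def register(self, ies_id, declared_posture, identity_verified):
        self.records[ies_id] = TrustRecord(declared_posture, identity_verified)

    def observe(self, ies_id, compliant):
        rec = self.records[ies_id]
        sample = 1.0 if compliant else 0.0
        rec.behavior_ema = (1 - self.alpha) * rec.behavior_ema + self.alpha * sample

    def score(self, ies_id):
        rec = self.records[ies_id]
        if not rec.identity_verified:
            return 0.0  # unverified identities get no trust at all
        w = self.declared_weight
        return w * rec.declared_posture + (1 - w) * rec.behavior_ema

    def may_communicate(self, a, b, min_trust):
        # A mini-TRC policy could supply min_trust for the pair's Zones.
        return min(self.score(a), self.score(b)) >= min_trust


dtms = DynamicTrustMetric()
dtms.register("ies-a", declared_posture=0.9, identity_verified=True)
dtms.register("ies-b", declared_posture=0.9, identity_verified=False)
for _ in range(5):
    dtms.observe("ies-a", compliant=True)
```

Taking the minimum of the two scores in `may_communicate` means a channel is only as trusted as its weaker endpoint.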

Diagram for Claim 2:

```mermaid
graph LR
    subgraph Method ["IES Method (Patent 1 Independent Claim 2)"]
        DefineMiniTRC["a. Define mini#8209;TRC (Trust Roots, Policies)"] --> StoreMiniTRC["Store mini-TRC (Tamper-Evident)"]
        subgraph StepB ["b. Instantiate Child IES"]
            Instantiate --> AllocateResources["Allocate<br>Dedicated Resources<br>(CPU, Memory, Comm)"]
            Instantiate --> AssignID["Assign Cryptographic ID"]
            Instantiate --> EstablishChannels["Establish<br>Secure Channels (Dynamic, Capability#8209;Augmented)"]
        end
        StoreMiniTRC --> Instantiate
        subgraph StepC ["c. Dynamic Partitioning"]
            Partition["Dynamically Partition IES"]
            AllocateResources --> Partition
            AssignID --> Partition
            EstablishChannels --> Partition
            MiniTRC["mini-TRC"] --> Partition
            DTMS["DTMS (Trust Management)"] --> Partition
            Partition --> Migrate["Securely Migrate Processes"]
        end
        Migrate --> ManageTrust["d. Manage Trust Relationships (DTMS)"]
        subgraph StepE ["e. Passive Monitoring"]
            ManageTrust --> PassiveMonitor["Passively Monitor Resources & Behavior"]
            subgraph Hierarchy ["Hierarchical Monitoring"]
                PassiveMonitor --> LSM["Employ LSM (Passive)"]
                LSM --"Anomaly Reports (Unidirectional)"--> WatcherMesh["Utilize Watcher Meshes (Passive)"]
                WatcherMesh --> AIHub["Communicate to AI Hub (External)"]
            end
        end
    end
```

Description for Diagram 2:

(See Independent Method Claim 2)

A system for decentralized security enforcement within a computing environment comprising a plurality of Isolated Execution Stacks (IES), each IES having a Local Security Mesh (LSM), the system comprising:

a. a plurality of Watcher Meshes, each Watcher Mesh associated with an LSM and configured to receive anomaly reports from said LSM via a unidirectional communication channel;

b. a distributed Consensus Engine, logically separated from said IES and LSM, said Consensus Engine receiving anomaly analysis and recommended actions from a central analysis hub (AI Hub) and said Watcher Meshes; and

c. a decentralized enforcement mechanism, wherein said Consensus Engine uses a distributed consensus protocol to determine and disseminate enforcement actions to said LSMs, enabling independent enforcement by each LSM based on the consensus decision and preventing a compromised AI Hub or Watcher Mesh from unilaterally enforcing actions.
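One way to read the "distributed consensus protocol" step is as a Byzantine-quorum vote. With n ≥ 3f + 1 voters, an action is adopted only when at least 2f + 1 distinct voters propose it, and the AI Hub counts as just one voter. Each LSM then re-checks that quorum before enforcing. The sketch below is a single-round tally, not a full protocol such as PBFT, and its voter names are hypothetical. A production design would also require each vote to be signed, because this sketch checks only voter membership.

```python
from collections import Counter
from dataclasses import dataclass


@dataclass(frozen=True)
class Decision:
    action: str
    voters: frozenset  # distinct voters whose proposals match the action


def quorum_size(n, f):
    # 2f + 1 of n >= 3f + 1 voters; also a strict majority so only one action can win.
    return max(2 * f + 1, n // 2 + 1)


class ConsensusEngine:
    def __init__(self, voter_ids, max_faulty):
        if len(voter_ids) < 3 * max_faulty + 1:
            raise ValueError("need n >= 3f + 1 voters to tolerate f faulty ones")
        self.voter_ids = frozenset(voter_ids)
        self.quorum = quorum_size(len(self.voter_ids), max_faulty)

    def decide(self, proposals):
        """proposals maps voter ID -> proposed action; returns a Decision or None."""
        valid = {v: a for v, a in proposals.items() if v in self.voter_ids}
        if not valid:
            return None
        action, count = Counter(valid.values()).most_common(1)[0]
        if count < self.quorum:
            return None
        return Decision(action, frozenset(v for v, a in valid.items() if a == action))


class EnforcingLSM:
    """Each LSM re-checks the quorum itself instead of trusting the sender."""

    def __init__(self, voter_ids, quorum):
        self.voter_ids = frozenset(voter_ids)
        self.quorum = quorum
        self.applied = []

    def enforce(self, decision):
        if decision is None or len(decision.voters & self.voter_ids) < self.quorum:
            return False
        self.applied.append(decision.action)
        return True


voters = ["ai-hub", "watcher-1", "watcher-2", "watcher-3"]
engine = ConsensusEngine(voters, max_faulty=1)
lsm = EnforcingLSM(voters, engine.quorum)
```

Because every LSM validates the decision independently, a compromised AI Hub acting alone can neither reach quorum nor get a fabricated decision enforced under unknown voter IDs.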

A system for hybrid security enforcement within a computing environment comprising a plurality of Isolated Execution Stacks (IES), each IES having a Local Security Mesh (LSM), the system comprising:

a. a plurality of Watcher Meshes, each Watcher Mesh associated with an LSM and configured to receive anomaly reports from said LSM via a unidirectional communication channel;

b. a central analysis hub (AI Hub) receiving anomaly analysis from said Watcher Meshes and generating recommended actions, wherein said AI Hub communicates with a central management entity (ASKA Hub);

c. a staged enforcement mechanism comprising:

i. a limited action set, wherein said AI Hub can directly instruct said Watcher Mesh to perform limited, pre-approved actions; and

ii. a critical action set, wherein for critical actions, said AI Hub sends recommended actions to said ASKA Hub, which evaluates said actions against pre-defined policies and trust levels before issuing enforcement commands to said LSMs, limiting the AI Hub’s direct control and enhancing security.
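The staged routing can be sketched as follows. The set of pre-approved limited actions and the thresholds for the ASKA Hub's policy and trust checks are hypothetical. Limited actions go directly to the Watcher Mesh (closed loop). Everything else remains only a recommendation until the ASKA Hub approves it (open loop).

```python
from dataclasses import dataclass, field

# Hypothetical pre-approved, non-disruptive actions for the closed-loop path.
LIMITED_ACTIONS = frozenset({"raise_log_level", "rate_limit", "snapshot_state"})


@dataclass(frozen=True)
class Recommendation:
    action: str
    target_ies: str
    confidence: float
    source: str = "ai-hub"


@dataclass
class WatcherMesh:
    performed: list = field(default_factory=list)

    def perform_limited(self, rec):
        if rec.action not in LIMITED_ACTIONS:
            raise PermissionError(f"{rec.action} is not a limited action")
        self.performed.append((rec.target_ies, rec.action))


@dataclass
class AskaHub:
    allowed_critical: frozenset   # predefined policy: critical actions ever permitted
    trust_levels: dict            # component ID -> trust level, e.g. from the DTMS
    min_trust: float = 0.7
    min_confidence: float = 0.8
    commands: list = field(default_factory=list)  # commands issued to LSMs

    def evaluate(self, rec):
        if rec.action not in self.allowed_critical:
            return False
        if self.trust_levels.get(rec.source, 0.0) < self.min_trust:
            return False
        if rec.confidence < self.min_confidence:
            return False
        self.commands.append((rec.target_ies, rec.action))
        return True


def route(rec, watcher, hub):
    """Closed loop for limited actions; open loop through the ASKA Hub otherwise."""
    if rec.action in LIMITED_ACTIONS:
        watcher.perform_limited(rec)
        return "closed-loop"
    return "approved" if hub.evaluate(rec) else "rejected"


watcher = WatcherMesh()
hub = AskaHub(allowed_critical=frozenset({"isolate_ies"}), trust_levels={"ai-hub": 0.9})
```

The Watcher Mesh refuses any non-limited action even when called directly. The closed-loop path therefore cannot be widened by a compromised AI Hub.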

A security monitoring system for a computing environment with multiple Isolated Execution Stacks (IES), each having a dedicated Local Security Mesh (LSM), the system comprising:

a. a hierarchical, out-of-band monitoring architecture including LSMs passively monitoring resource access patterns without direct interaction, Watcher Meshes passively monitoring LSMs and their associated IES while receiving anomaly reports from LSMs via unidirectional channels, and a Master Security Mesh (MSM) receiving aggregated reports from Watcher Meshes; and

b. a distributed Consensus Engine, separate from IES and LSMs, receiving anomaly analysis from a central AI Hub and Watcher Meshes, using a distributed consensus protocol to determine and disseminate enforcement actions to LSMs via unidirectional channels, preventing unilateral actions by compromised components.

A security monitoring system for a computing environment with multiple Isolated Execution Stacks (IES) each having a dedicated Local Security Mesh (LSM), the system comprising:

a. a hierarchical, out-of-band monitoring architecture including LSMs passively monitoring resource access patterns without direct interaction, Watcher Meshes passively monitoring LSMs and their associated IES while receiving anomaly reports from LSMs via unidirectional channels and communicating them to a central AI Hub and Master Security Mesh (MSM), and the MSM distributing security policies and updates to LSMs unidirectionally; and

b. a hybrid enforcement mechanism with a closed-loop pathway for the AI Hub to instruct Watcher Meshes to perform limited, non-disruptive actions, and an open-loop pathway where, for critical actions, the AI Hub sends recommendations to an ASKA Hub, which evaluates them against predefined policies and DTMS trust levels before issuing enforcement commands to LSMs, thereby limiting the AI Hub’s direct control over critical actions.

A security monitoring system for a computing environment comprising a plurality of Isolated Execution Stacks (IES), each IES associated with a dedicated Local Security Mesh (LSM), the system comprising:

a. a hierarchical, out-of-band monitoring architecture, comprising:

i. said LSMs passively monitoring resource access patterns of respective IES instances and associated system resources, said monitoring performed without direct interaction with said resources, enabling compromise resilience and enhanced system stability;

ii. a plurality of Watcher Meshes, each Watcher Mesh associated with an LSM and configured to:

1. passively monitor said LSM and its associated IES instance, mirroring said LSM’s passive monitoring of resources and providing a second layer of independent oversight;

2. receive anomaly reports from said LSM via a unidirectional communication channel, preventing compromised LSMs from influencing said Watcher Mesh; and

3. communicate anomaly reports and analysis to a central analysis hub (AI Hub) and a Master Security Mesh (MSM);

iii. said MSM receiving aggregated anomaly reports from said Watcher Meshes and distributing security policies and updates to said LSMs via unidirectional communication channels, preventing compromised LSMs from propagating malicious instructions; and

b. a hybrid enforcement mechanism comprising:

i. a closed-loop pathway for limited actions, wherein said AI Hub can directly instruct said Watcher Meshes to perform a pre-defined set of limited, non-disruptive actions within their associated IES instances, enabling rapid response to low-risk anomalies; and

ii. an open-loop pathway for critical actions, wherein said AI Hub communicates recommendations for critical actions to a central management entity (ASKA Hub), which evaluates said recommendations against pre-defined policies and trust levels derived from a Dynamic Trust Management System (DTMS) before issuing enforcement commands to said LSMs, limiting the AI Hub’s direct control over high-impact actions and enhancing security.

Rationale for Assigning to Patent 2:

This claim focuses on the interaction and communication between various security components (LSMs, Watcher Meshes, MSM, AI Hub, ASKA Hub). This aligns with Patent 2's focus on inter-IES communication and adaptive security. While the LSM is mentioned, the claim's emphasis is on the overarching system and its communication pathways, not on the internal workings of an individual IES. The claim also explicitly addresses the hybrid enforcement mechanism (closed-loop and open-loop), which is a key innovation for mitigating feedback-loop vulnerabilities. The hierarchical, out-of-band design of the monitoring architecture is also detailed in Patent 2's specification.

Detailed Analysis of the Diagram and its Relationship to the Claim:

The diagram provides a visual representation of the claim's elements:

This claim, informed by a thorough analysis of the diagram, aims to comprehensively capture the novel aspects of the Security Mesh architecture and its integration within the ASKA system. By addressing both the monitoring architecture and the hybrid enforcement mechanism, the claim strengthens the patent's protection of this key innovation.

A system for secure user interface (UI) monitoring and analysis within a secure computing environment, comprising:

a. an isolated execution environment (IES) hosting an AI agent, said IES providing hardware-enforced isolation from other system components, including said UI;

b. a UI monitoring module configured to passively observe user interactions with said UI via a unidirectional communication channel, preventing said AI agent from directly manipulating said UI;

c. a data sanitization and filtering module within said IES receiving data from said UI monitoring module, removing potentially malicious or sensitive information before providing said data to said AI agent; and

d. a contextual analysis engine within said IES, said engine processing sanitized UI interaction data, correlating said data with system events and security telemetry, and generating contextualized alerts and recommendations, enhancing security by incorporating user context into anomaly detection and threat analysis.
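Here is a sketch of the sanitization/filtering and contextual-analysis steps. It assumes regex-based redaction rules, a set of secret widget names, and a fixed two-second correlation window; these are illustrative choices, not anything the claim prescribes. Sanitization runs before any data reaches the AI agent, so correlation only ever sees sanitized events.

```python
import re
from dataclasses import dataclass


@dataclass(frozen=True)
class UIEvent:
    t: float      # seconds since session start
    widget: str
    text: str


@dataclass(frozen=True)
class SystemEvent:
    t: float
    kind: str


# Hypothetical redaction rules; a deployment would load these from policy.
REDACTIONS = [
    (re.compile(r"\b\d{13,19}\b"), "[CARD]"),
    (re.compile(r"[\w.+-]+@[\w-]+\.[\w.]+"), "[EMAIL]"),
]
CONTROL_CHARS = re.compile(r"[\x00-\x08\x0b-\x1f\x7f]")
SECRET_WIDGETS = {"password", "pin"}
MAX_LEN = 256


def sanitize(ev):
    """Drop secrets and control characters before the AI agent sees the event."""
    if ev.widget in SECRET_WIDGETS:
        return UIEvent(ev.t, ev.widget, "[REDACTED]")
    text = CONTROL_CHARS.sub("", ev.text)
    for pattern, label in REDACTIONS:
        text = pattern.sub(label, text)
    return UIEvent(ev.t, ev.widget, text[:MAX_LEN])


def correlate(ui_events, sys_events, window=2.0):
    """Attach system events occurring within `window` seconds of each UI event."""
    alerts = []
    for ui in ui_events:
        nearby = [s.kind for s in sys_events if abs(s.t - ui.t) <= window]
        if nearby:
            alerts.append({"ui": ui, "system_events": nearby})
    return alerts


raw = [
    UIEvent(10.0, "search", "send invoice to alice@example.com"),
    UIEvent(11.0, "password", "hunter2"),
    UIEvent(30.0, "payment", "card 4111111111111111\x07"),
]
clean = [sanitize(e) for e in raw]
alerts = correlate(clean, [SystemEvent(11.5, "outbound_connection"), SystemEvent(50.0, "usb_mount")])
```

Redaction runs before truncation, so a long value cannot be cut mid-pattern and slip past the rules.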

A method for securely updating an AI agent operating within an isolated execution environment (IES), the method comprising:

a. receiving a digitally signed update package via a secure communication channel;

b. verifying the digital signature of said update package and its integrity using a trust root configuration (TRC);

c. creating an isomorphic model of the target system within a sandboxed environment within said IES, said isomorphic model reflecting the structure, communication patterns, and functionalities of the system without exposing sensitive data;

d. validating said update package within said sandboxed environment using said isomorphic model, generating validation results; and

e. conditionally installing said update package within said IES based on said validation results, wherein a rollback mechanism is provided to revert to a previous version of said AI agent in case of failure or security violations, preserving system integrity and availability.
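The sequence of verifying, sandbox-validating, installing, and rolling back could look like the sketch below. To stay within the Python standard library it uses HMAC-SHA256 as a stand-in for a digital signature. A real TRC would hold public keys and verify an asymmetric signature (for example Ed25519). The `sandbox_validate` callable is a hypothetical placeholder for validation against the isomorphic model, and the downgrade check is an added assumption.

```python
import hashlib
import hmac
from dataclasses import dataclass, field


@dataclass(frozen=True)
class UpdatePackage:
    version: int
    payload: bytes
    tag: bytes  # stand-in for a digital signature


def sign(key, version, payload):
    # HMAC-SHA256 over version || payload; a real TRC would verify e.g. Ed25519.
    return hmac.new(key, version.to_bytes(8, "big") + payload, hashlib.sha256).digest()


@dataclass
class AgentHost:
    trc_key: bytes
    installed: list = field(default_factory=list)  # (version, payload) history
    log: list = field(default_factory=list)

    @property
    def current(self):
        return self.installed[-1] if self.installed else None

    def apply(self, pkg, sandbox_validate, post_install_check):
        """sandbox_validate models validation against the isomorphic model."""
        if not hmac.compare_digest(sign(self.trc_key, pkg.version, pkg.payload), pkg.tag):
            self.log.append(("reject", pkg.version, "bad signature"))
            return False
        if self.current is not None and pkg.version <= self.current[0]:
            self.log.append(("reject", pkg.version, "not newer"))
            return False
        if not sandbox_validate(pkg.payload):
            self.log.append(("reject", pkg.version, "sandbox validation failed"))
            return False
        self.installed.append((pkg.version, pkg.payload))
        if not post_install_check(pkg.payload):
            self.installed.pop()  # roll back to the previous validated version
            self.log.append(("rollback", pkg.version, "post-install check failed"))
            return False
        self.log.append(("install", pkg.version, "ok"))
        return True


def accept_all(payload):
    return True


key = b"demo-trc-key"  # hypothetical shared key for the sketch only
host = AgentHost(key, installed=[(1, b"agent-v1")])
```

Keeping the install history as a list makes rollback a simple pop back to the last version that passed every check.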

A system for security orchestration within a secure computing environment, comprising:

a. a master security mesh (MSM) monitoring system events and generating security alerts;

b. an AI agent operating within an isolated execution environment (IES), receiving security alerts from said MSM and user interaction data from a secure UI monitoring module;

c. a plurality of AI modules within said AI agent, each AI module independently analyzing data from a respective source and proposing security actions, said sources including at least one of: system events, user behavior, threat intelligence feeds, or audit logs;

d. a consensus engine, logically separated from said MSM and AI agent, receiving proposed security actions from said AI modules and using a distributed consensus protocol to determine a final security action, enhancing security by preventing any single compromised AI module from unilaterally enforcing potentially disruptive actions; and

e. a response system executing said final security action, said response system comprising at least one of: an isolator for isolating compromised components, a self-healer for restoring system integrity, or a resource manager for adjusting resource allocation.

Preliminary Novelty Assessment and Rationale:

These claims address novel aspects of ASKA's AI agent integration:

Patent 11: Secure UI Subsystem with Hierarchical Isolation, Unidirectional Communication, and Consensus-Driven Security Orchestration

Secure UI Subsystem with Hierarchical Isolation, Enhanced Security Update Mechanism, and Decentralized Security Orchestration

Revised Abstract:

This invention introduces a secure UI subsystem with hierarchical isolation, unidirectional communication, and consensus-driven security orchestration. The dedicated UI Kernel operates in complete hardware isolation, with a secure communication bus enforcing unidirectional data flow from IES instances to the UI Kernel, preventing reverse communication attacks. A multi-region display buffer with dynamically adjustable trust levels, governed by declarative policies and enforced by a hardware Display Validation Module, enhances security and supports flexible UI rendering. Hardware-enforced control-flow integrity (CFI) protects the UI Kernel's execution flow. A dedicated TRC and Policy Engine provide granular control over UI security. A Secure UI Integration Module facilitates communication with the ASKA Hub, using the Secure Inter-Zone Collaboration Framework (SIZCF) for inter-zone communication and supporting remote attestation. A secure AI agent update mechanism, using isomorphic model validation within a sandboxed environment and a secure rollback capability, ensures the integrity of the AI agent. Consensus-driven security orchestration, using multiple AI modules and a consensus engine logically separated from the MSM and AI agent, enhances security and prevents unilateral actions by potentially compromised components. A response system executes the consensus decision, providing isolation, self-healing, and resource management capabilities.

Claims:

1. A secure user interface (UI) system for a computing environment comprising a plurality of Modular Isolated Execution Stacks (IES) organized into a hierarchy of Zones, each Zone associated with a Trust Root Configuration (TRC) stored on a decentralized, tamper-proof ledger, the UI system comprising:

a. a dedicated UI Kernel operating in complete hardware isolation from said IES instances, said UI Kernel further comprising:

i. a dedicated CPU and physically isolated memory with hardware-enforced segmentation, preventing unauthorized access from other components;

ii. a secure, unidirectional, hardware-enforced communication bus connecting said IES instances to said UI Kernel, preventing reverse communication attacks and enforcing data flow control;

iii. a multi-region display buffer, each region assigned a dynamically adjustable trust level based on the origin and sensitivity of displayed information, governed by declarative policies expressed in a policy language, wherein said policies specify permitted actions and data types for each trust level and region;

iv. a hardware-based Display Validation Module ensuring the integrity and authenticity of content rendered within each region of said display buffer, utilizing checksums, digital signatures, or a combination thereof for each data element rendered, and further logging all validation events to a tamper-proof audit log on said decentralized ledger; and

v. a hardware-enforced control-flow integrity (CFI) mechanism protecting the UI Kernel's execution flow from unauthorized modification or hijacking, said CFI mechanism integrated with access control policies based on program execution states and dynamically updated based on trust levels of interacting IES instances;

b. a Trust Root Configuration (TRC) specific to said UI Kernel, said TRC stored on said decentralized ledger and cryptographically linked to the UI Kernel’s identity, defining trust roots, trust policies, and access control rules specific to the UI Kernel and its associated Zone, wherein said TRC is verifiable by other ASKA components;

c. a Policy Engine within said UI Kernel interpreting and enforcing said declarative policies, dynamically adjusting trust levels of display regions based on the origin of UI components, data sensitivity labels, real-time threat assessments, and changes propagated from the ASKA Hub, and controlling access to UI resources based on a combination of trust levels, dynamically issued capabilities (Patent 2), and TRC policies; and

d. a Secure UI Integration Module facilitating secure communication between the UI Kernel and the ASKA Hub, leveraging the Secure Inter-Zone Collaboration Framework (SIZCF - Patent 22) for authenticated and encrypted inter-zone communication, coordinating policy updates from the ASKA Hub, supporting remote attestation of the UI Kernel using hardware-rooted trust mechanisms, and maintaining a session-specific audit trail on said decentralized ledger.
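To make the declarative-policy idea concrete, here is a sketch of how the Policy Engine and the Display Validation Module might cooperate. It assumes a three-level trust scale, a hypothetical policy table, and a rule that lowers a region's trust by one level per unit of threat. The SHA-256 comparison checks integrity only; authenticity would need a signature. In the claimed design both checks run in hardware, not in Python.

```python
import hashlib
from dataclasses import dataclass

# Hypothetical declarative policy: trust level -> permitted data types and actions.
POLICY = {
    "high": {"data_types": {"text", "image", "credential_prompt"}, "actions": {"render", "input"}},
    "medium": {"data_types": {"text", "image"}, "actions": {"render", "input"}},
    "low": {"data_types": {"text"}, "actions": {"render"}},
}
LEVELS = ["low", "medium", "high"]


@dataclass
class Region:
    name: str
    trust: str


def adjust_trust(region, origin_trust, threat_level):
    """Policy Engine step: start from the origin's trust, drop one level per threat level."""
    idx = LEVELS.index(origin_trust) - max(threat_level, 0)
    region.trust = LEVELS[max(idx, 0)]


def validate_and_render(region, data_type, action, content, expected_sha256, audit):
    """Display-validation step: policy check plus per-element integrity check."""
    rule = POLICY[region.trust]
    permitted = data_type in rule["data_types"] and action in rule["actions"]
    intact = hashlib.sha256(content).hexdigest() == expected_sha256
    audit.append((region.name, region.trust, data_type, action, permitted and intact))
    return permitted and intact


audit = []
pane = Region("banking-pane", "high")
content = b"Balance: 1,024.00"
digest = hashlib.sha256(content).hexdigest()
```

Every validation attempt is appended to `audit`, including rejected ones. This mirrors the claim's requirement that all validation events be logged.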

13. A method for securely updating an AI agent operating within an isolated execution environment (IES), the method comprising:

a. receiving a digitally signed update package via a secure, authenticated communication channel, wherein said channel is established using mutual authentication between a trusted authority and said IES;

b. verifying the digital signature and integrity of said update package using a trust root configuration (TRC), including checks for version compatibility and policy compliance, rejecting the update if verification fails;

c. creating an isomorphic model of the target system within a sandboxed environment within said IES, said isomorphic model mirroring the structure, communication patterns, and functionalities of the target system, including security policies and trust relationships, without exposing sensitive data or live system connections;

d. validating said update package within said sandboxed environment using said isomorphic model, generating detailed validation results that are logged to a tamper-proof audit trail; and

e. conditionally installing said update package within said IES based on said validation results and authorization from a central management entity, wherein a secure rollback mechanism is provided to revert to a previous, validated version of said AI agent in case of failure or security violations, ensuring system integrity and availability, and wherein all update and rollback actions are logged to a tamper-proof audit trail.
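The requirement that all update and rollback actions be logged to a tamper-proof audit trail can be approximated with a hash chain. Each entry commits to the hash of the previous one, so any edit to an earlier entry breaks verification. This Python sketch is tamper-evident rather than tamper-proof. Anchoring the latest hash on the decentralized ledger (not shown) is what would let an outside party detect truncation or wholesale replacement. The event fields are hypothetical.

```python
import hashlib
import json

GENESIS = "0" * 64


class AuditTrail:
    """Append-only log in which every entry commits to the hash of the one before."""

    def __init__(self):
        self.entries = []

    @staticmethod
    def _digest(prev, event):
        body = json.dumps(event, sort_keys=True)
        return hashlib.sha256((prev + body).encode()).hexdigest()

    def append(self, event):
        prev = self.entries[-1]["hash"] if self.entries else GENESIS
        digest = self._digest(prev, event)
        self.entries.append({"prev": prev, "event": event, "hash": digest})
        return digest

    def verify(self):
        prev = GENESIS
        for entry in self.entries:
            if entry["prev"] != prev or self._digest(prev, entry["event"]) != entry["hash"]:
                return False
            prev = entry["hash"]
        return True


trail = AuditTrail()
trail.append({"step": "received", "version": 3})
trail.append({"step": "sandbox_validation", "version": 3, "result": "fail"})
head = trail.append({"step": "rollback", "to_version": 2})
```

Serializing with `sort_keys=True` keeps the hash stable regardless of dictionary insertion order.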

20. A system for consensus-driven security orchestration within a secure computing environment, comprising:

a. a master security mesh (MSM) monitoring system events, aggregating security telemetry from local security meshes (LSMs) associated with isolated execution environments (IES), and generating security alerts based on pre-defined rules, anomaly detection patterns, and threat intelligence feeds;

b. an AI agent operating within an isolated execution environment (IES), receiving security alerts from said MSM and user interaction data from a secure UI monitoring module via a unidirectional communication channel, wherein said UI monitoring module passively observes user interactions without influencing said UI;

c. a plurality of diverse AI modules within said AI agent, each AI module independently analyzing data from a respective source (including system events, user behavior, threat intelligence feeds, and/or audit logs) and proposing security actions, wherein said AI modules employ diverse analytical techniques and algorithms, and wherein the trustworthiness of each AI module is dynamically assessed based on its performance, accuracy, and adherence to pre-defined security policies;

d. a consensus engine, logically and physically separated from said MSM and AI agent and operating within a trusted execution environment, receiving proposed security actions from said AI modules, dynamically weighting said actions based on trustworthiness, and using a distributed consensus protocol to determine a final security action; and

e. a response system executing said final security action after authorization from a central management entity (ASKA Hub), said response system comprising:

i. an isolator for securely isolating compromised components, including network segmentation, capability revocation, and IES termination;

ii. a self-healer for restoring system integrity through automated rollback mechanisms, software updates, and reconfiguration of affected components; and

iii. a resource manager for dynamically adjusting resource allocation to support isolation and self-healing actions, minimizing disruption to other system processes.
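Here is a sketch of the trust-weighted consensus in steps c and d. It assumes weights proportional to each module's trust, a cap on any one module's share, and a simple reward/penalty update after an audited outcome. The cap (0.4) is below the adoption threshold (0.6), so no single AI module can push an action through alone. The threshold exceeds 0.5, so at most one action can win. All parameter values are illustrative.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass
class ModuleState:
    trust: float = 0.5  # neutral starting trustworthiness


class WeightedConsensus:
    def __init__(self, module_ids, threshold=0.6, max_share=0.4, lr=0.1):
        # threshold > 0.5: at most one action can win.
        # max_share < threshold: no single module reaches the threshold alone.
        if not (0.5 < threshold and max_share < threshold):
            raise ValueError("need threshold > 0.5 and max_share < threshold")
        self.modules = {m: ModuleState() for m in module_ids}
        self.threshold = threshold
        self.max_share = max_share
        self.lr = lr

    def weights(self):
        total = sum(s.trust for s in self.modules.values())
        return {m: min(s.trust / total, self.max_share) for m, s in self.modules.items()}

    def decide(self, proposals):
        """proposals: module ID -> proposed action. Returns the action or None."""
        w = self.weights()
        support = defaultdict(float)
        for module, action in proposals.items():
            if module in w:
                support[action] += w[module]
        if not support:
            return None
        action, score = max(support.items(), key=lambda kv: kv[1])
        return action if score >= self.threshold else None

    def feedback(self, proposals, correct_action):
        """Dynamic trust assessment: reward agreement with the audited outcome."""
        for module, action in proposals.items():
            state = self.modules.get(module)
            if state is None:
                continue
            if action == correct_action:
                state.trust += self.lr * (1 - state.trust)
            else:
                state.trust -= self.lr * state.trust


engine = WeightedConsensus(["events", "behavior", "intel", "audit"])
votes = {"events": "isolate", "behavior": "isolate", "intel": "isolate", "audit": "none"}
```

The multiplicative penalty keeps every trust value strictly positive, so the weight normalization never divides by zero.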

Abstract: This invention discloses a secure and adaptive onboard AI agent for enhancing security within a multi-kernel computing architecture, such as ASKA. The AI agent operates within a hardware-isolated execution environment (IES), ensuring its integrity and protection against compromise. A secure UI monitoring module passively observes user interactions, providing context for the AI agent's analysis without allowing the agent to manipulate the UI. The AI agent integrates with ASKA components (DTMS, MSM, AESDS, Decentralized Ledger) through secure communication channels and capability-based access control, receiving security telemetry, trust information, and software updates. A local LLM engine, securely storing model weights and managing context, provides AI capabilities within the isolated environment. A secure API allows controlled access to the AI agent's functionalities by other ASKA components. Optional integration with Federated Learning and external systems expands the agent's potential applications. This architecture provides a robust and adaptive AI-powered security enhancement, protecting user privacy, ensuring system integrity, and enabling dynamic adaptation to evolving threats.

Claim 1: A secure onboard AI agent system for a multi-kernel computing architecture comprising a plurality of Isolated Execution Stacks (IES), a Dynamic Trust Management System (DTMS), a Master Security Mesh (MSM), and an Automated Evolutionary Software Development System (AESDS), the AI agent system comprising:

a. a dedicated IES instance for hosting said AI agent, providing hardware-enforced isolation from other system components and the external network;

b. a secure UI monitoring module within said IES, passively observing user interactions with a Secure UI Kernel via a unidirectional communication channel, capturing user input, UI state, and system events without influencing said UI;

c. a local Large Language Model (LLM) engine within said IES, comprising:

i. secure model storage for encrypting and storing LLM weights;

ii. an inference engine for executing said LLM;

iii. a tokenizer for preprocessing text input; and

iv. a context management module for maintaining conversation context and history;

d. a set of ASKA integration modules within said IES, comprising:

i. a DTMS integration module for receiving trust information and policy updates from said DTMS via secure communication channels and dynamically adjusting the agent’s behavior based on said trust information;

ii. an MSM integration module for receiving security alerts and anomaly reports from said MSM via unidirectional communication channels;

iii. an AESDS integration module for securely receiving and applying software updates and patches for said AI agent and LLM engine; and

iv. a Decentralized Ledger integration module for securely logging agent activity and API interactions to a tamper-proof audit trail;

e. a secure API within said IES, enabling controlled access to said AI agent’s functionalities by other ASKA components via capability-based access control; and

f. an Aggregation and Processing Hub, external to said IES but within the secure computing architecture, securely receiving outputs from said AI agent via said secure API, correlating said outputs with data from other ASKA components, and disseminating results to authorized components and users via secure communication channels, wherein access to said results is controlled by capability-based access control.
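Capability-based access control on the secure API can be sketched with unforgeable tokens. Each token binds a holder, a single operation, and an expiry under a MAC. The issuer, the key handling, the logical clock, and the two example operations are hypothetical; the claim does not specify a token format.

```python
import hashlib
import hmac
import json
from dataclasses import dataclass


@dataclass(frozen=True)
class Capability:
    holder: str
    operation: str
    expires_at: int  # logical timestamp
    tag: bytes


class CapabilityIssuer:
    """Hypothetical issuer; the MAC key never leaves the issuer."""

    def __init__(self, key):
        self._key = key

    def _mac(self, holder, operation, expires_at):
        msg = json.dumps([holder, operation, expires_at]).encode()  # unambiguous encoding
        return hmac.new(self._key, msg, hashlib.sha256).digest()

    def issue(self, holder, operation, expires_at):
        return Capability(holder, operation, expires_at, self._mac(holder, operation, expires_at))

    def check(self, cap, caller, operation, now):
        return (
            cap.holder == caller
            and cap.operation == operation
            and now < cap.expires_at
            and hmac.compare_digest(cap.tag, self._mac(cap.holder, cap.operation, cap.expires_at))
        )


class AgentAPI:
    def __init__(self, issuer):
        self._issuer = issuer
        self._handlers = {
            "summarize_alerts": lambda alerts: f"summary of {len(alerts)} alerts",
            "explain_event": lambda event_id: f"explanation for {event_id}",
        }

    def call(self, caller, operation, cap, arg, now):
        if operation not in self._handlers or not self._issuer.check(cap, caller, operation, now):
            raise PermissionError(f"{caller} holds no valid capability for {operation}")
        return self._handlers[operation](arg)


issuer = CapabilityIssuer(b"demo-issuer-key")  # hypothetical key for the sketch
api = AgentAPI(issuer)
cap = issuer.issue("aggregation-hub", "summarize_alerts", expires_at=1000)
```

Scoping each capability to one operation means a token handed to the Aggregation and Processing Hub for summaries cannot be reused to reach other agent functions.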

Claim 2: A method for enhancing security in a multi-kernel computing architecture using an onboard AI agent, the method comprising:

a. passively monitoring user interactions with a Secure UI Kernel within a dedicated, isolated execution environment (IES) using a unidirectional communication channel;

b. processing the monitored UI interactions with a local Large Language Model (LLM) engine within said IES, leveraging context and ASKA-specific knowledge;

c. generating insights, recommendations, or security actions based on the processed UI interactions and other ASKA telemetry received via secure integration modules;

d. securely communicating the generated outputs to an Aggregation and Processing Hub via a secure API within said IES; and

e. correlating the AI agent's outputs with data from other ASKA components within said Hub and disseminating the results to authorized components and users via secure communication channels.
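Step a depends on a unidirectional channel: the monitoring agent can observe the Secure UI Kernel but can never write back to it. The sketch below is only a software analogy. It uses Python's multiprocessing.Pipe with duplex=False in place of the hardware-enforced channel the claim implies. The event fields and the agent_can_write_back helper are hypothetical.

```python
from multiprocessing import Pipe

# duplex=False gives (receive_end, send_end); each end works in one direction only.
agent_rx, ui_kernel_tx = Pipe(duplex=False)


def agent_can_write_back(conn) -> bool:
    """Return True if the monitoring agent could push data toward the UI kernel."""
    try:
        conn.send({"cmd": "inject"})
        return True
    except OSError:  # multiprocessing refuses writes on a read-only end
        return False


# The Secure UI Kernel side holds only the send end and emits interaction events.
ui_kernel_tx.send({"event": "keystroke", "field": "search", "ts": 1})

# The agent's IES holds only the receive end and observes passively.
observed = agent_rx.recv()
print(observed["event"], agent_can_write_back(agent_rx))
```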

Assessment of Claims:

Let's devise two novel, robust independent method claims for ASKA, focusing on addressing potential gaps and inspiring new architectural diagrams.

Claim 1: Dynamically Reconfigurable Trust Zones based on Real-Time Threat Assessment

A method for dynamically reconfiguring trust zones within a secure computing system comprising a plurality of Isolated Execution Stacks (IES) and a Dynamic Trust Management System (DTMS), the method comprising:

a. Continuously monitoring system events, network traffic, and user behavior within each IES using a combination of hardware and software sensors;

b. Analyzing the monitored data using an AI-powered threat assessment engine to identify potential security risks and assign dynamic trust scores to each IES;

c. Adapting trust zone boundaries in real-time based on the dynamic trust scores, wherein an IES can dynamically join or leave a trust zone based on its assigned trust score, enforcing appropriate access controls and security policies based on the dynamic trust zones;

d. Recording all trust zone changes and related security events on a distributed immutable ledger, ensuring auditability and transparency, wherein each trust zone maintains an isolated audit log;

e. Triggering re-attestation of IES instances joining a new trust zone based on dynamic trust configuration thresholds, said re-attestation including the creation of tamper-evident microstructures logged to said immutable ledger to enable provenance tracking and analysis during or after each attestation attempt; and

f. Providing user notifications through a disconnectable out-of-band channel according to user-configurable notification policies that define which types and severity levels of events, whether trust zone changes or other information, are reported to users, wherein notifications that may contain secrets undergo policy-based sanitization, including AI-driven redaction (Patent P35), before being presented, and wherein notifications are either ephemeral or archived within secure enclaves.
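Below is a minimal sketch of steps c through e. It assumes the threat assessment engine of step b has already normalized trust scores to [0, 1]. The zone names, thresholds, ZoneAuditLog, and reconfigure are hypothetical. A SHA-256 hash chain stands in for the distributed immutable ledger, and each zone keeps its own isolated log as step d requires. Re-attestation is only flagged here, not performed; the microstructure step is out of scope.

```python
import hashlib
import json

# Hypothetical zones, most trusted first, with the minimum trust score to join.
ZONES = [("restricted", 0.8), ("standard", 0.5), ("quarantine", 0.0)]
REATTEST_ON_JOIN = {"restricted"}  # joining these zones triggers re-attestation
GENESIS = "0" * 64


def zone_for(score: float) -> str:
    """Most trusted zone whose threshold the score meets."""
    for name, minimum in ZONES:
        if score >= minimum:
            return name
    return ZONES[-1][0]  # scores below every threshold fall into the last zone


class ZoneAuditLog:
    """Append-only, hash-chained log; one isolated instance per trust zone."""

    def __init__(self) -> None:
        self.entries = []

    @staticmethod
    def _link(prev: str, record: dict) -> str:
        return hashlib.sha256((prev + json.dumps(record, sort_keys=True)).encode()).hexdigest()

    def append(self, record: dict) -> None:
        record = dict(record)  # private copy so zone logs stay isolated
        prev = self.entries[-1]["hash"] if self.entries else GENESIS
        self.entries.append({"record": record, "prev": prev, "hash": self._link(prev, record)})

    def verify(self) -> bool:
        prev = GENESIS
        for entry in self.entries:
            if entry["prev"] != prev or entry["hash"] != self._link(prev, entry["record"]):
                return False
            prev = entry["hash"]
        return True


def reconfigure(membership: dict, scores: dict, logs: dict) -> list:
    """Move each IES to the zone its latest trust score implies.

    Logs every transition in both the old and the new zone's audit log and
    returns the IES instances that must re-attest before being admitted.
    """
    must_reattest = []
    for ies_id, score in scores.items():
        old, new = membership.get(ies_id), zone_for(score)
        if old == new:
            continue
        membership[ies_id] = new
        event = {"ies": ies_id, "from": old, "to": new, "score": score}
        for zone in {old, new} - {None}:
            logs[zone].append(event)
        if new in REATTEST_ON_JOIN:
            must_reattest.append(ies_id)
    return must_reattest


if __name__ == "__main__":
    logs = {name: ZoneAuditLog() for name, _ in ZONES}
    membership = {"ies-1": "standard", "ies-2": "restricted"}
    print(reconfigure(membership, {"ies-1": 0.91, "ies-2": 0.42}, logs))  # ['ies-1']
    print(membership)  # {'ies-1': 'restricted', 'ies-2': 'quarantine'}
```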

Claim 2: AI-Driven Predictive Security Policy Generation and Deployment

A method for proactive security policy management in a multi-kernel computing environment comprising a plurality of Isolated Execution Stacks (IES) and a Dynamic Trust Management System (DTMS), the method comprising:

a. Monitoring runtime security parameters, including software attestation logs (Patent P33), resource utilization reports, communications analysis, user activity, intrusion detection triggers, threat intelligence, resource conflicts (Patent P9), capability and consent denials (Patent P2), UI rendering analysis (Patent P11), hardware health diagnostics (Patent P7), memory protection logs, network firewall event triggers (Patent P3), secure data storage access logs (Patent P24), zone transitions (Patent P22), security configuration validation data, resource sharing negotiations (Patents P1, P9), trust establishment and violation attempts (Patent P4), system audit logs, model validation and training (Patents P16, P19, P20), network intrusions and denial-of-service (DoS) attempts, AI security alerts and predictions (Patents P2, P7, P15), user and entity behavior analytics, external authentication and authorization records, application state and environment metadata, policy status and conflicts (Patent P15), or key management logs (Patents P27, P28, P29);

b. Generating a vector embedding for the collected monitoring parameters;

c. Employing a predictive AI model, trained offline on labeled historical security incidents or system simulations (including attack simulations), that receives and processes the parameter vector to anticipate potential threats and security policy needs;

d. Synthesizing declarative security policies within said IES and secure execution enclaves, expressing the policies in a domain-specific language (DSL);

e. Deploying the synthesized security policies after validating and verifying them through isomorphic validation against synthesized simulated scenarios, using capabilities to restrict their distribution and modification;

f. Using policy voting across federated zones, wherein each node holding existing and new policies for an overlapping context votes according to the policies’ weights;

g. Providing an API to query security incident predictions, expected risks, policy justifications, recommended configurations, simulated outcomes, threat level trends, validation report details, policy coverage status, model training specifics, network and zone statistics, key strength predictions (based on observed intrusion attempts, pattern analysis of known breaches, AI predictions, entropy levels, etc.), and hardware performance or stability forecasts; and

h. Receiving policy reviews or policy change suggestions that enable human override of automatically generated policies, weighted by the individual trust weights of security review committee members for specific policy domains, and of groups and committees spanning domains where overlaps exist.
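Steps f and h can be illustrated with a trust-weighted vote. The sketch assumes that each federated zone submits (node, policy, weight) tuples for one overlapping context, and that each review-committee member carries a numeric trust weight. The quorum and threshold values and both function names are hypothetical choices, not parameters taken from the claim.

```python
from collections import defaultdict


def tally_policy_votes(votes, quorum=0.5):
    """Weighted vote among federated zones on one overlapping policy context.

    votes: iterable of (node_id, policy_id, weight) tuples.
    Returns the winning policy_id if its share of the total weight exceeds
    quorum, otherwise None (no decision; escalate for human review).
    """
    totals = defaultdict(float)
    for _node, policy, weight in votes:
        if weight < 0:
            raise ValueError("policy weights must be non-negative")
        totals[policy] += weight
    grand_total = sum(totals.values())
    if grand_total == 0:
        return None
    winner, best = max(totals.items(), key=lambda kv: kv[1])
    return winner if best / grand_total > quorum else None


def resolve_with_review(auto_policy, override_policy, reviews, threshold=2 / 3):
    """Apply a human override when trust-weighted reviewer support reaches threshold.

    reviews: list of (reviewer_trust_weight, supports_override) pairs.
    """
    total = sum(weight for weight, _ in reviews)
    support = sum(weight for weight, supports in reviews if supports)
    if total > 0 and support / total >= threshold:
        return override_policy
    return auto_policy


if __name__ == "__main__":
    votes = [("zoneA", "pol-17", 0.9), ("zoneB", "pol-17", 0.6), ("zoneN", "pol-22", 0.8)]
    chosen = tally_policy_votes(votes)
    print(chosen)  # pol-17
    print(resolve_with_review(chosen, "pol-22", [(0.5, True), (0.4, True), (0.1, False)]))
```

A tie, or any result without a clear majority of the weight, returns None rather than an arbitrary winner. This leaves a natural hook for the human review of step h.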

Both of these new claims center on novel method-based security enhancements for ASKA. They hook back into existing subsystems so that they couple more tightly with those capabilities. They use AI and other techniques in security-centric ways, and a robust administrative dashboard with a variety of configuration knobs keeps humans in control. They also inspire other new areas, such as data lineage for auditing secure user input and UI integrity over the wire. AI, blockchain, or even 3D-printed audit trails could record where the system or AI sanitized or modified potentially malicious input before showing information in the UI. Rapid analysis tools would then give additional confidence, safety, and verifiable trust at any historical point in time. This is another broad system enhancement for another patent and set of patent claims.

You've proposed a very interesting and valuable security enhancement for ASKA related to UI integrity and data lineage. Here's a breakdown and how it fits into the patent portfolio:

Concise Explanation:

The idea is to create a tamper-proof audit trail of all user interactions with the Secure UI, including any modifications made by ASKA (sanitization, filtering, etc.). This audit trail would leverage AI, blockchain, or 3D microstructures to ensure integrity and verifiability, providing enhanced transparency and trust. This would allow for detailed forensic analysis of UI interactions, enabling detection of malicious activity, system errors, or policy violations.

Patent Portfolio Integration (New Patent - P37):

Title: System and Method for Secure UI Interaction Auditing with Tamper-Evident Data Lineage

Abstract: This invention discloses a system and method for creating a secure and verifiable audit trail of all user interactions with a Secure UI within a multi-kernel computing architecture like ASKA. The system captures all user inputs, system modifications to those inputs, and the final rendered output, creating a complete data lineage. This lineage is secured using a combination of AI-driven anomaly detection, blockchain technology for immutability, and/or 3D microstructures for physical tamper-evidence. This comprehensive approach ensures the integrity and verifiability of UI interactions, enabling detailed forensic analysis, enhancing user trust, and providing robust protection against UI manipulation or data corruption.

Claim 1: A system for secure UI interaction auditing within a secure computing environment comprising a Secure UI Kernel and a plurality of Isolated Execution Stacks (IES), the system comprising:

a. a UI Input Capture Module within said Secure UI Kernel capturing all user inputs, including keystrokes, mouse movements, and touch events, associating each input with a timestamp and a unique identifier;

b. a System Modification Log recording any modifications made by the system to user inputs, including sanitization, filtering, and formatting, associating each modification with the corresponding input identifier;

c. a UI Output Capture Module capturing the final rendered output presented to the user by the Secure UI Kernel, associating the output with the corresponding input identifier;

d. a Data Lineage Builder correlating user inputs, system modifications, and rendered outputs using said identifiers, creating a complete, time-ordered data lineage for each UI interaction;

e. a Tamper-Evident Log storing said data lineage using at least one of: i. a distributed, immutable ledger (blockchain) recording cryptographic hashes of each element in the data lineage; ii. a 3D microstructure fabrication module generating unique physical microstructures representing cryptographic hashes of elements in the data lineage; or iii. an AI-driven anomaly detection module analyzing the data lineage for inconsistencies or deviations from expected behavior, generating alerts and logging anomalies to a secure audit log; and

f. a Secure UI Interaction Analysis Tool enabling authorized users to query and analyze the tamper-evident log, providing tools for visualizing UI interactions, reconstructing user sessions, identifying anomalies, and generating audit reports.
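The Data Lineage Builder and the ledger option of the Tamper-Evident Log (elements d and e.i) can be sketched as follows. The sketch assumes that each captured element carries an input_id and an integer timestamp ts. The field names and helpers are hypothetical. The dictionary returned by anchor_hashes stands in for hashes that would actually be committed to a distributed ledger.

```python
import hashlib
import json
from collections import defaultdict


def element_hash(element: dict) -> str:
    """SHA-256 over a canonical JSON encoding of one lineage element."""
    return hashlib.sha256(json.dumps(element, sort_keys=True).encode()).hexdigest()


def build_lineage(inputs, modifications, outputs):
    """Correlate captured elements by input identifier into time-ordered chains."""
    lineage = defaultdict(list)
    for kind, items in (("input", inputs), ("modification", modifications), ("output", outputs)):
        for item in items:
            lineage[item["input_id"]].append({"kind": kind, **item})
    for chain in lineage.values():
        chain.sort(key=lambda element: element["ts"])  # stable: ties keep capture order
    return dict(lineage)


def anchor_hashes(lineage) -> dict:
    """Per-interaction element hashes, i.e. what would be written to the ledger."""
    return {iid: [element_hash(e) for e in chain] for iid, chain in lineage.items()}


def verify_lineage(lineage, anchored) -> bool:
    """Recompute every hash and compare with the anchored copy."""
    return anchor_hashes(lineage) == anchored


if __name__ == "__main__":
    lineage = build_lineage(
        inputs=[{"input_id": "u1", "ts": 1, "text": "<script>"}],
        modifications=[{"input_id": "u1", "ts": 2, "op": "sanitize", "text": "&lt;script&gt;"}],
        outputs=[{"input_id": "u1", "ts": 3, "rendered": "&lt;script&gt;"}],
    )
    anchored = anchor_hashes(lineage)  # assumed to be committed to the ledger
    print(verify_lineage(lineage, anchored))  # True
    lineage["u1"][1]["text"] = "<script>"  # someone hides the sanitization step
    print(verify_lineage(lineage, anchored))  # False
```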

Relationship to Existing Patents:

Novelty:

The novelty of Claim 1 lies in the combination of:

Claim 2 (Method Claim):

A method for auditing user interactions with a Secure UI within a secure computing environment, the method comprising:

a. capturing, by a UI Input Capture Module, all user inputs presented to a Secure UI Kernel, associating each input with a timestamp and a unique identifier, wherein said inputs include at least one of: keystrokes, mouse movements, touch events, or audio input;

b. logging, by a System Modification Log, all modifications made to said user inputs by the system, including sanitization, filtering, redaction, transformation, formatting, or augmentation, associating each modification with the corresponding input identifier and a timestamp;

c. capturing, by a UI Output Capture Module, the final rendered output displayed to the user by said Secure UI Kernel, associating said output with a timestamp and the corresponding input identifier, wherein said output can include display elements and/or audio output;

d. constructing, by a Data Lineage Builder, a complete, time-ordered data lineage for each UI interaction by correlating captured user inputs, logged system modifications, and captured rendered output using said identifiers and timestamps;

e. creating a tamper-evident record of said data lineage using at least one of: i. generating a cryptographic hash of each element within the data lineage and recording said hashes on a distributed, immutable ledger; ii. fabricating a unique physical microstructure for each element within the data lineage, wherein said microstructure physically embodies a representation of a cryptographic hash of the corresponding element; or iii. analyzing said data lineage using an AI-driven anomaly detection module to detect inconsistencies or deviations from expected behavior, logging detected anomalies and generating corresponding alerts; and

f. analyzing, by an authorized user utilizing a Secure UI Interaction Analysis Tool, said tamper-evident record of the data lineage to visualize user interactions, reconstruct user sessions, perform forensic analysis, and generate audit reports, wherein said tool provides functionality to query and filter the data lineage based on user, timestamp, type of input, type of modification, or other relevant criteria, and further enabling redaction of sensitive information during forensic analysis before presenting the data to an auditor.
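The query-and-redact behavior of step f might look like the sketch below. It assumes the lineage records have already been flattened into simple rows. LineageRecord, query, and the two regular expressions are hypothetical. A real deployment would take its redaction rules from policy, or from the AI-driven redaction of Patent P35, rather than hard-coding them. The original records are never modified, so the tamper-evident copy stays intact.

```python
import re
from dataclasses import dataclass, replace
from typing import List, Optional

# Hypothetical redaction rules; a real deployment would load these from policy.
SENSITIVE_PATTERNS = [
    re.compile(r"\b\d{12,19}\b"),              # card-like digit runs
    re.compile(r"(?i)password\s*[:=]\s*\S+"),  # inline credentials
]


@dataclass(frozen=True)
class LineageRecord:
    user: str
    ts: int
    input_type: str
    modification: Optional[str]
    content: str


def redact(text: str) -> str:
    """Replace every match of every sensitive pattern with a placeholder."""
    for pattern in SENSITIVE_PATTERNS:
        text = pattern.sub("[REDACTED]", text)
    return text


def query(records, user=None, since=None, until=None,
          input_type=None, modification=None) -> List[LineageRecord]:
    """Filter lineage records by the given criteria, redacting content for the auditor."""
    hits = []
    for r in records:
        if user is not None and r.user != user:
            continue
        if since is not None and r.ts < since:
            continue
        if until is not None and r.ts > until:
            continue
        if input_type is not None and r.input_type != input_type:
            continue
        if modification is not None and r.modification != modification:
            continue
        hits.append(replace(r, content=redact(r.content)))  # copy; original untouched
    return hits


if __name__ == "__main__":
    records = [
        LineageRecord("alice", 10, "keystroke", "sanitize", "card 4111111111111111 ok"),
        LineageRecord("bob", 20, "paste", None, "password=hunter2"),
    ]
    for hit in query(records, until=20):
        print(hit.user, hit.content)
```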

A system for secure communication and scalable key recovery in a distributed computing environment, comprising:

a. a Quantum Phase Tunneling Framework utilizing point-to-multipoint cascading quantum entanglements to manage transitions of secure data between quantum-protected and traditional communication channels, incorporating robust integrity checks during data transfer and optimized for efficient key recovery operations in multi-geographical network segments;

b. a Dynamic Operator-Cluster Failover Mechanism for activating backup consensus pathways and rerouting secure data during consensus breakdowns or critical failures, ensuring continuous operation and enabling rapid restoration of data flow for key recovery;

c. an Adaptive Security and Privacy Balancing Algorithm that dynamically optimizes the allocation of quantum resources, adjusting tunnel bridging strategies according to prevailing operational conditions and projected quantum phase integration theories, and balancing security, privacy, and system resource constraints by adapting resource management priorities, communication parameters, and entanglement strategies according to policies, trust levels, and anticipated performance needs; and

d. a Transparent Cryptographic Attestation Process that generates verifiable cryptographic proofs of operational integrity from each node, monitored by distributed verifiers using a consensus protocol for real-time security validation and enhanced system trust, with all attestations securely logged to a tamper-evident, distributed immutable ledger (blockchain) and further secured through physically unclonable function (PUF) based authentication.
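Element d can be illustrated with a challenge-response sketch. Each node proves its state with an HMAC keyed by a device secret over its measurement and a verifier-chosen nonce, and the proof is accepted only if more than two thirds of the verifiers accept it. The node key stands in for a PUF-derived secret. Verifier, make_proof, and consensus_attest are hypothetical names. Ledger logging and the quantum transport are out of scope. The nonce has a fixed length, so appending it to the measurement is unambiguous.

```python
import hashlib
import hmac
import secrets


def make_proof(node_key: bytes, measurement: bytes, nonce: bytes) -> bytes:
    """Node side: bind the current measurement to a fresh verifier nonce."""
    return hmac.new(node_key, measurement + nonce, hashlib.sha256).digest()


class Verifier:
    """One distributed verifier holding the node key and a golden measurement."""

    def __init__(self, node_key: bytes, reference_measurement: bytes) -> None:
        self.node_key = node_key
        self.reference = reference_measurement

    def accepts(self, proof: bytes, nonce: bytes) -> bool:
        expected = hmac.new(self.node_key, self.reference + nonce, hashlib.sha256).digest()
        return hmac.compare_digest(proof, expected)


def consensus_attest(proof: bytes, nonce: bytes, verifiers, quorum: float = 2 / 3) -> bool:
    """Accept only if more than `quorum` of the verifiers accept the proof."""
    votes = sum(v.accepts(proof, nonce) for v in verifiers)
    return votes / len(verifiers) > quorum


if __name__ == "__main__":
    key = secrets.token_bytes(32)  # stand-in for a PUF-derived device secret
    golden = hashlib.sha256(b"firmware-v1").digest()
    nonce = secrets.token_bytes(16)
    # Three honest verifiers plus one holding a stale reference value.
    verifiers = [Verifier(key, golden) for _ in range(3)] + [Verifier(key, b"stale")]
    print(consensus_attest(make_proof(key, golden, nonce), nonce, verifiers))  # True
```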

The paper on a Modified Firefly Optimization Algorithm for intrusion detection offers intriguing ideas adaptable to ASKA. Here are some novel security architecture enhancements inspired by it:

These novel features tie back into your earlier innovations by enhancing them with nature-inspired principles and introducing more dynamic and adaptive responses to security threats. They suggest the following diagrams to incorporate these additions:

graph LR

subgraph ASKA Network

ZoneA[Zone A] --> NIC_A["Network Interface<br>(Zone A)"]

ZoneB[Zone B] --> NIC_B["Network Interface<br>(Zone B)"]

ZoneN[Zone N] --> NIC_N["Network Interface<br>(Zone N)"]

NIC_A --> MCN["Multi-Channel Network (MCN)"]

NIC_B --> MCN

NIC_N --> MCN

subgraph "Dynamic Security Policy<br>Deployment"

MCN --> PolicyEngine["Policy Engine (P4)"]

PolicyEngine --> HealthMonitor["Health Monitor<br>(AI Agent)"]

HealthMonitor -->|"Health Function<br>Data"| DLT["Decentralized Ledger (DLT)"]

HealthMonitor -->|"Attestation Request"| HWAttestation["Hardware Attestation<br>(3D Microstructures)"]

HWAttestation --> DLT

DLT --> PolicyUpdates["Dynamic Policy<br>Updates"]

PolicyUpdates --> PolicyEngine

end

MCN --> Firewall["Firewall (P3)"]

Firewall --> External["External Networks"]

subgraph Security Alerting

HealthMonitor --> AlertGen["Alert Generator (P7)"]

AlertGen -->|"Secure Channel (P3)"| SecurityConsole["Security Console"]

AlertGen --> DLT

end

end

Diagram Explanation:

Key Points:

graph

subgraph ASKA Zone A

direction LR

IES_A1[IES 1] --> Agent_A1["AI Agent (Swarm)"]

IES_An[IES n] --> Agent_An["AI Agent (Swarm)"]

Agent_A1 --> API_A["Agent API"]

Agent_An --> API_A

API_A --> Health_A["Health Function<br>(Zone A)"]

Health_A --> DLT_A["Decentralized Ledger (DLT)"]

DLT_A --> Attestation_A["Attestation (3D Microstructures)"]

end

subgraph ASKA Zone B

direction LR

IES_B1[IES 1] --> Agent_B1["AI Agent (Swarm)"]

IES_Bn[IES n] --> Agent_Bn["AI Agent (Swarm)"]

Agent_B1 --> API_B["Agent API"]

Agent_Bn --> API_B

API_B --> Health_B["Health Function<br>(Zone B)"]

Health_B --> DLT_B["Decentralized Ledger (DLT)"]

DLT_B --> Attestation_B["Attestation (3D Microstructures)"]

end

subgraph ASKA Zone N

direction LR

IES_N1[IES 1] --> Agent_N1["AI Agent (Swarm)"]

IES_Nn[IES n] --> Agent_Nn["AI Agent (Swarm)"]

Agent_N1 --> API_N["Agent API"]

Agent_Nn --> API_N

API_N --> Health_N["Health Function<br>(Zone N)"]

Health_N --> DLT_N["Decentralized Ledger (DLT)"]

DLT_N --> Attestation_N["Attestation (3D Microstructures)"]

end

subgraph "ASKA Mesh & Resource Sharing"

Attestation_A --> Mesh

Attestation_B --> Mesh

Attestation_N --> Mesh

Health_A --> Mesh

Health_B --> Mesh

Health_N --> Mesh

Mesh["ASKA<br>Mesh<br>(Inter-Zone Comm)"] --> Consensus["Consensus<br>Engine"]

Consensus --> ResourceBorrowing["Resource Borrowing<br>(AI Agent Coordination)"]

ResourceBorrowing --> API_A

ResourceBorrowing --> API_B

ResourceBorrowing --> API_N

end

Diagram Explanation:

graph

subgraph ASKA Zone A

direction LR

IES_A["IES Cluster (P1)"] --> Agent_A["AI Agent<br>(P16, P35)"]

Agent_A --> LocalPolicyA["Local Policy<br>Optimization (P16)"]

User_A["User (MFA)"] --> OverrideA["Policy Override"]

OverrideA -.-> LocalPolicyA

LocalPolicyA --> DLT_A["Decentralized Ledger (P13,P15)"]

LSM_A["Local<br>MSM (P2)"] -.-> IES_A

LSM_A -.-> Agent_A

end

subgraph ASKA Zone B

direction LR

IES_B["IES Cluster (P1)"] --> Agent_B["AI Agent<br>(P16, P35)"]

Agent_B --> LocalPolicyB["Local Policy<br>Optimization (P16)"]

User_B["User (MFA)"] --> OverrideB["Policy Override"]

OverrideB -.-> LocalPolicyB

LocalPolicyB --> DLT_B["Decentralized Ledger (P13,P15)"]

LSM_B["Local<br>MSM (P2)"] -.-> IES_B

LSM_B -.-> Agent_B

end

subgraph ASKA Zone N

direction LR

IES_N["IES Cluster (P1)"] --> Agent_N["AI Agent<br>(P16, P35)"]

Agent_N --> LocalPolicyN["Local Policy<br>Optimization (P16)"]

User_N["User (MFA)"] --> OverrideN["Policy Override"]

OverrideN -.-> LocalPolicyN

LocalPolicyN --> DLT_N["Decentralized Ledger (P13,P15)"]

LSM_N["Local<br>MSM (P2)"] -.-> IES_N

LSM_N -.-> Agent_N

end

subgraph "Global Policy & Resource Mgmt"

direction LR

DLT_A --> Sync["Secure Sync<br>(P22)"]

DLT_B --> Sync

DLT_N --> Sync

Sync --> GlobalPolicy["Global Policy<br>Database"]

GlobalPolicy --> Hub["ASKA Hub"]

Hub --> ResourceMgr["Resource Manager<br>(P9, P10)"]

AESDS["AESDS (P16)"] --> Hub

MDATS["MDATS (P17)"] --> GlobalPolicy

ResourceMgr --> ZoneA

ResourceMgr --> ZoneB

ResourceMgr --> ZoneN

end

ZoneA["Zone A Resources"] -.-> IES_A

ZoneB["Zone B Resources"] -.-> IES_B

ZoneN["Zone N Resources"] -.-> IES_N

Hub --> ZoneA

Hub --> ZoneB

Hub --> ZoneN

Explanation and Justification:

Okay, let's brainstorm! No judgment, just rapid-fire ideas for expanding the ASKA patent portfolio, synthesizing our existing patents (particularly the novel aspects of 29, 30, and 31) and fresh concepts:

This list is a starting point; each idea can be further refined and expanded.

Okay, building on the exciting potential of ideas 6 (MPC in DTMS for privacy-preserving trust negotiations) and 10 (extending spatiotemporal digests to more physical parameters), here are ten more brainstorming ideas in a similar vein, aiming for high novelty and synergy:

These ideas build on ASKA's strengths while enhancing privacy and security. They combine differential privacy and secure multiparty computation (MPC) in novel ways to address challenging, practical deployment scenarios. Remember, each of these requires a thorough prior-art search before being developed and prioritized.

Applicant: Paul Lowndes / NSHkr

Date: October 26, 2023

Field of the Invention: This invention relates to the field of digital content authentication and integrity verification, particularly for raster data such as audio, images, and video.

Background of the Invention:

Existing methods for verifying the authenticity and integrity of digital raster content often rely on cryptographic hashing algorithms or digital signatures applied to the raster data itself. However, these methods are vulnerable to manipulation if the original raster data is altered and then re-signed. Furthermore, there is a need for a method to link raster data definitively to the reality from which it was captured. Current attempts to achieve such verification, such as those employing image signature mechanisms from a consortium of camera developers, often lack the robustness and generality required for widespread application and fail to address the possibility of sophisticated manipulation of the original capture environment itself.

Summary of the Invention:

This invention provides a novel system and method for verifying the authenticity and integrity of raster content by generating a spatiotemporal digest that represents the physical environment from which the raster content was captured. The spatiotemporal digest is derived from detailed sensor measurements of the physical environment, including a plurality of physical parameters. The digest generation process, based on proprietary theoretical and experimental research, exhibits a provable lack of isomorphism between the raw sensor data and the resulting digest. This digest creates a strong, one-way link between the raster content and the physical reality it represents. The invention further incorporates traditional cryptographic signature verification methods as a secondary layer of protection, functioning independently from the spatiotemporal digest mechanism. This dual-layered approach provides enhanced security and facilitates legal verifiability.
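The digest algorithm itself is undisclosed, so the following is only a stand-in that shows the dual-layer structure. SHA-256 over quantized sensor readings plays the role of the spatiotemporal digest, a hash binds the digest to the raster bytes, and an HMAC substitutes for the independent cryptographic signature. All function names, the quantization step, and the assumption that the capture-time record is anchored immediately (for example, on a ledger) are hypothetical.

```python
import hashlib
import hmac
import json


def spatiotemporal_digest(readings: dict, ts: float, lat: float, lon: float,
                          step: float = 0.01) -> str:
    """Stand-in digest: SHA-256 over quantized sensor readings, time and place.

    NOT the proprietary algorithm; it only illustrates a one-way environment summary.
    """
    quantized = {name: round(value / step) for name, value in sorted(readings.items())}
    payload = json.dumps(
        {"env": quantized, "t": round(ts), "loc": [round(lat, 5), round(lon, 5)]},
        sort_keys=True,
    )
    return hashlib.sha256(payload.encode()).hexdigest()


def seal(raster: bytes, digest: str, signing_key: bytes) -> dict:
    """Capture-time record; assumed to be anchored (e.g. on a ledger) immediately."""
    return {
        "digest": digest,
        "binding": hashlib.sha256(raster + bytes.fromhex(digest)).hexdigest(),
        # Secondary, independent layer: HMAC stands in for a public-key signature.
        "signature": hmac.new(signing_key, raster, hashlib.sha256).hexdigest(),
    }


def verify(raster: bytes, anchored: dict, presented_signature: str, signing_key: bytes):
    """Return (digest_layer_ok, signature_layer_ok), checked independently."""
    binding = hashlib.sha256(raster + bytes.fromhex(anchored["digest"])).hexdigest()
    expected_sig = hmac.new(signing_key, raster, hashlib.sha256).hexdigest()
    return (
        hmac.compare_digest(binding.encode(), anchored["binding"].encode()),
        hmac.compare_digest(expected_sig.encode(), presented_signature.encode()),
    )


if __name__ == "__main__":
    key = b"camera-signing-key"
    d = spatiotemporal_digest({"lux": 812.4, "temp_c": 21.37}, ts=1700000000.2,
                              lat=45.5231, lon=-122.6765)
    raster = b"original pixels"
    record = seal(raster, d, key)
    print(verify(raster, record, record["signature"], key))  # (True, True)
    edited = b"edited pixels"
    resigned = hmac.new(key, edited, hashlib.sha256).hexdigest()  # attacker holds the key
    print(verify(edited, record, resigned, key))  # (False, True)
```

The second call shows why the layers are kept independent. An attacker holding the signing key can re-sign edited pixels, but cannot make them match the binding anchored at capture time.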

Detailed Description of the Invention:

The invention comprises a system including:

Claims:

This provisional patent application is filed to protect the novel aspects of this invention, primarily the system and method of generating and using a spatiotemporal digest for verifying the authenticity and integrity of raster content. The specific details of the proprietary algorithm remain undisclosed at this time but are covered under this application. Future patent applications will describe specific implementations and algorithms in greater detail.

The Spatiotemporal Digest for Raster Content Verification has several compelling integration points within ASKA that could significantly enhance its security and utility. Here's a breakdown of potential integrations and their advantages:

1. HESE-DAR Integration for Secure Digest Storage and Processing:

2. DTMS Integration for Trust-Based Access Control:

3. Multi-Channel Network for Secure Communication of Sensor Data and Digests:

4. MDATS Integration for Audit Trails of Verification Processes:

5. AESDS Integration for Automated Updates and Security Patching:

6. IAMA Integration for Legacy System Compatibility:

ASKA vs Raster Verification Use Cases:

A. ASKA Use Cases (Independent of Raster Verification):

B. Raster Content Verification System Use Cases (Independent of ASKA):

C. Overlapping Use Cases and Synergies:

The overlap arises in scenarios where both high security and verifiable raster content are required:

D. Practical Implementations and Integration:

This staged approach allows for practical implementation and incremental adoption, starting with professional applications and gradually expanding to consumer devices as the technology matures. The integration of the spatiotemporal digest with ASKA significantly enhances security and trustworthiness, providing strong guarantees about the integrity and authenticity of raster content in sensitive applications.